From 'Data Drought' to 'Data Deluge'

Data in healthcare

There can be few areas of modern life that have not been profoundly influenced by information technology and the rapid, easy availability of data: it is hard to imagine a world without internet banking, online shopping or email. Healthcare, by contrast, has been slower to adapt to the information revolution, owing to a vast legacy of paper notes, the inherent complexity of its services, regulatory difficulties, legitimate privacy concerns and perhaps a degree of professional conservatism. As a result, most medical data remains machine-unreadable, hidden away and unstructured in paper notes and incompatible systems. This is a lost opportunity, first of all for the patient. For a treatment decision to be sound, it must take holistic account of the patient’s entire history and not just a snapshot from the clinic: impossible if records are ‘siloed’. Secondly, it is a lost opportunity for society. We increasingly realise just how heterogeneous individuals, diseases and the interactions between the two are. If healthcare data, with all its inherent complexity, were more accessible and amenable to automated analysis, then hitherto unappreciated patterns of prognostic and therapeutic importance might emerge.

Nevertheless, medical data is increasingly available in electronic form. A sizeable proportion of US healthcare providers now use electronic record systems. The NHS’s ill-fated National Programme for Information Technology was rather less effective in promoting change, but the UK is now finally catching up, and electronic healthcare is here to stay.1

Big data and intensive care

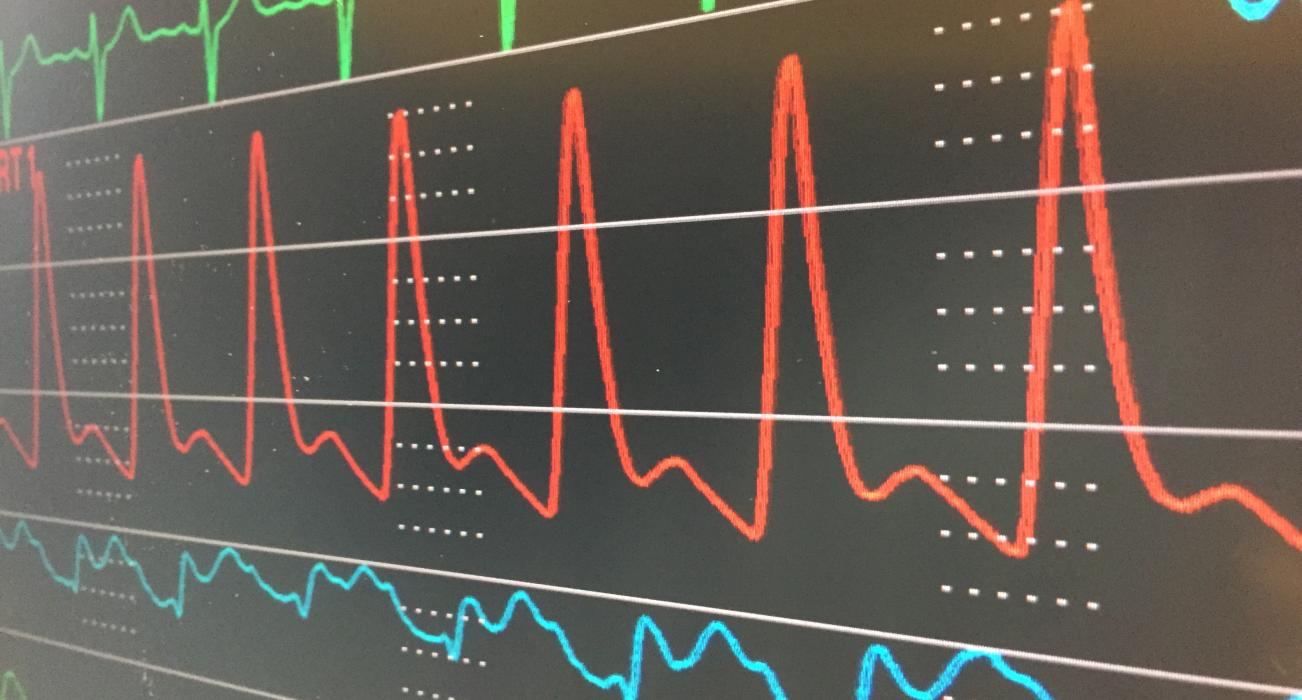

Across all of medicine, the care of critically ill patients who have the misfortune to need the Intensive Care Unit (ICU) provides by far the most data-dense episodes imaginable. Patients on the ICU are among the most profoundly sick in healthcare, and they can, and do, deteriorate in a life-threatening way over a matter of minutes (sometimes even seconds). As a result, they are more extensively monitored and investigated than any other group, in the hope of detecting deterioration and intervening in time to prevent harm or even death. Continuous measurement of heart function, blood pressures and life-support parameters generates gigabytes of data per day. This poses its own problem: it is impossible for a clinician to spot potentially complex inter-relationships between many different parameters as they change over time. As a result, we use very little of the data that we collect. This is an example of what has become known as ‘Big Data’: data so voluminous or otherwise complex that humans struggle to make sense of it.

Humans are good at making complex, even life-or-death, decisions based on limited or imperfect information, weighing that information against judgements about individual preferences and wishes. Few would seriously suggest that computers will take this away. Computers, on the other hand, can search large volumes of data for patterns. Algorithms that search for, and make inferences from, complex associations in Big Data have been developed, primarily in other fields such as commerce and engineering, and are becoming increasingly sophisticated. These techniques, which fall under the umbrella of ‘Artificial Intelligence’, include a variety of so-called ‘Machine Learning’ strategies that allow computers to ‘learn’ associations from data.

This, of course, assumes that such associations exist and are missed by humans. But there is evidence that they do and are. Recently, we used anonymised, routinely collected data to look for features that might predict unexpected readmission to the ICU. Such readmissions, which result from unexpected, life-threatening deterioration in patients who have been stepped down to the ward, are important: a number of studies have linked unexpected readmission to longer hospital stays and even increased mortality. Since the doctors who decided to discharge each patient had the full plethora of recent and historical results to hand, are such events simply an unpredictable matter of chance? Not so, it turns out. By applying contemporary machine learning algorithms to a set of 76 parameters, we were able to predict many such readmissions credibly, outperforming not only the human clinicians but also previously described simple scoring systems.2
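As a rough, hypothetical illustration of the general shape of such an analysis (not the method of the cited study, for which see reference 2), the sketch below fits a plain logistic regression by gradient descent to a simulated cohort and compares its discrimination, measured as the area under the ROC curve (AUROC), with a ‘score’ that ranks patients on a single parameter. The simulated cohort, the three parameters and all function names are assumptions made for illustration; no real patient data or results are involved.

```python
import math
import random


def sigmoid(z):
    """Logistic function: maps a real-valued score to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(X, y, lr=0.1, epochs=500):
    """Fit logistic-regression weights (bias first) by batch gradient descent
    on the mean log-loss."""
    w = [0.0] * (len(X[0]) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for xi, yi in zip(X, y):
            err = sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], xi))) - yi
            grad[0] += err
            for j, xj in enumerate(xi):
                grad[j + 1] += err * xj
        w = [wj - lr * gj / len(X) for wj, gj in zip(w, grad)]
    return w


def predict(w, X):
    """Predicted probability of the outcome for each row of X."""
    return [sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], xi))) for xi in X]


def auroc(scores, labels):
    """Probability that a random positive case outranks a random negative
    case (ties count as half)."""
    pos = [s for s, lab in zip(scores, labels) if lab == 1]
    neg = [s for s, lab in zip(scores, labels) if lab == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical simulated cohort: 3 parameters per patient, of which only the
# first two influence the simulated readmission risk.
sim_rng = random.Random(0)


def simulate_patient():
    x = [sim_rng.gauss(0.0, 1.0) for _ in range(3)]
    risk = sigmoid(1.5 * x[0] + 1.5 * x[1] - 1.0)
    return x, 1 if sim_rng.random() < risk else 0


cohort = [simulate_patient() for _ in range(400)]
train, test = cohort[:300], cohort[300:]
X_train, y_train = [x for x, _ in train], [y for _, y in train]
X_test, y_test = [x for x, _ in test], [y for _, y in test]

weights = fit_logistic(X_train, y_train)
model_auc = auroc(predict(weights, X_test), y_test)
# Stand-in for a simple score: rank held-out patients on one parameter only.
simple_auc = auroc([x[0] for x in X_test], y_test)
print(f"model AUROC {model_auc:.2f}, single-parameter score AUROC {simple_auc:.2f}")
```

A real analysis needs far more care than this: many more parameters (76 in the study described above), handling of missing values, cross-validation rather than a single train/test split, and checks of calibration as well as discrimination.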

Big Data approaches allow us to understand things that we could not before. Dr Chris Meiring (2010), who previously studied Medicine, is currently undertaking an MPhil at Magdalene, using Machine Learning to examine the logical framework behind the ethics of ICU admission decision-making. The best medical decisions are made when the physician has the most accurate information. This is particularly true of ethically challenging decisions, for which clear, accurate information provides the proper context. The ICU is an environment in which ethical principles become real decisions every day: the duty of care not to inflict necessarily painful, degrading and burdensome treatments on a patient when they may in fact be futile, and the just allocation of limited resources, must always be held in balance. At the same time, denying someone such treatments on an assumption of futility, without further assessment, risks becoming a self-fulfilling prophecy. Yet we do not know where that balance lies. How long should we treat before we can predict the outcome with sufficient confidence for the patient (or the clinician, in partnership with the relatives of patients who lack the capacity to speak for themselves) to decide what is an acceptable balance of outcome and burden?

Tools have been developed to aid prognostication in the intensive care setting. However, the most widely used tools have major drawbacks. For example, they typically use only information collected on admission, whereas definitive prognostic decisions are often made after several days. Chris’s work aims to tackle this. Does it make sense to keep patients in a precious intensive care bed for several days before making a definitive decision? Does each new day add information that significantly improves prognostic ability? We are using both traditional statistical methods and machine learning algorithms to build the best predictive models we can for each successive day in intensive care, based on objective, observable parameters. Comparing these should show us whether days two and three add important new information for prognostication. If they do, then a new predictive model that takes them into account could substantially improve on the current tools. If not, this raises the question of whether definitive prognostic decisions should be made earlier.
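Comparing day-by-day models means asking whether any gain in discrimination from later days is larger than sampling noise. One common way to do this, shown here as a minimal sketch rather than as the project’s actual method, is a paired bootstrap of the difference in AUROC between an early and a later model scored on the same patients. The function names, the simulated scores and the choice of AUROC as the metric are all assumptions for illustration; real models would supply the scores.

```python
import random


def auroc(scores, labels):
    """Probability that a random positive case outranks a random negative
    case (ties count as half)."""
    pos = [s for s, lab in zip(scores, labels) if lab == 1]
    neg = [s for s, lab in zip(scores, labels) if lab == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def bootstrap_auc_gain(early_scores, later_scores, labels, n_boot=500, seed=0):
    """Paired bootstrap of the AUROC gain (later model minus early model).

    Patients are resampled with replacement and both models are re-evaluated
    on the same resample, keeping the comparison paired. Returns the observed
    gain and an approximate 95% percentile interval.
    """
    rng = random.Random(seed)
    n = len(labels)
    observed = auroc(later_scores, labels) - auroc(early_scores, labels)
    diffs = []
    while len(diffs) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        ys = [labels[i] for i in idx]
        if 0 < sum(ys) < n:  # skip resamples containing only one outcome
            diffs.append(auroc([later_scores[i] for i in idx], ys)
                         - auroc([early_scores[i] for i in idx], ys))
    diffs.sort()
    return observed, diffs[int(0.025 * n_boot)], diffs[int(0.975 * n_boot)]


# Hypothetical held-out cohort: the simulated "day 3" scores are constructed
# to carry a stronger outcome signal than the simulated "day 1" scores.
sim_rng = random.Random(1)
labels = [1 if sim_rng.random() < 0.3 else 0 for _ in range(150)]
day1_scores = [0.5 * y + sim_rng.gauss(0.0, 1.0) for y in labels]
day3_scores = [1.5 * y + sim_rng.gauss(0.0, 1.0) for y in labels]

gain, lower, upper = bootstrap_auc_gain(day1_scores, day3_scores, labels)
print(f"AUROC gain {gain:+.3f} (95% interval {lower:+.3f} to {upper:+.3f})")
```

Because the later scores here were built to be more informative, a positive gain is expected in this toy example; with real data either answer is possible, which is precisely the question being asked. An interval that excludes zero would suggest the later day adds discrimination beyond sampling noise.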

The project has required Chris to engage with new challenges, including complex statistical modelling, parallel processing and the management of computing resources, and the clear graphical representation of complicated results. It has stretched him to expand the limits of his computing and statistical knowledge while confronting difficult ethical questions.

Moreover, the closer we look at the data, the more we find. There is hidden information folded unseen within the many waveforms and traces that are continuously recorded in the ICU. Dr Stephen Eglen (2017), Official Fellow in Mathematics at Magdalene and Reader in Computational Neuroscience, and I are working together on an innovative project, under the auspices of the Cantab Capital Institute for the Mathematics of Information, to examine the information content and structure of data from patients with severe neurotrauma, in the hope of identifying hitherto unrecognised signatures of disease within the signals recorded from the body and the brain.

Where now?

I have no doubt that Artificial Intelligence and Machine Learning techniques will become commonplace in medicine, and UK academics are well placed to be at the vanguard of such developments. To study Big Data, we need large amounts of data. To date, the largest research repository for ICU data is MIMIC-III in the US. Cambridge has played its part by helping to establish the Critical Care Health Informatics Collaborative (CCHIC).3 This database continues to grow and now rivals its US counterpart in the number of patients included. It also has the advantage of being multi-centre and linkable, allowing us to address questions about the long-term impact of intensive care on survivors once they go home, a hugely important area that has nevertheless received little attention. The CCHIC dataset is still relatively new, but it underpins Chris’s work, and we hope that it will soon begin to bear scientific fruit.

Medical data is potentially sensitive and precious to us, and this must be respected, particularly when we step beyond the individual and look to the population. There is nothing new about using potentially sensitive medical data to understand population health. Of particular public concern, however, is the involvement of industry, which has in many ways pioneered Big Data technology. The recent high-profile case involving DeepMind and the Royal Free London NHS Foundation Trust shows how rapidly public faith can be eroded when data is not properly governed, despite good intentions.4 Online banking demonstrates that it is possible to keep large volumes of highly sensitive data secure, even in the hands of industry. However, it is for researchers to convince society of the benefits of Big Data research in medicine for patients, and to demonstrate our ongoing trustworthiness.

Finally, we need to ensure that our doctors and healthcare professionals of the future are equipped for a potential paradigm shift in medical research. We should not aspire to replace the doctor. Treatment decisions at the individual level are deeply personal and ultimately require the intangible qualities that allow humans to understand each other’s personal and cultural perspectives. No matter how clever algorithms become, uncertainty will always remain, and only humans can deal with it in a societally and individually acceptable way. Nevertheless, I think it likely that data-driven techniques will become increasingly important in medicine. Such approaches are fundamentally transdisciplinary and require a confluence of expertise not only from medicine but also from computing, mathematics and data science. Our healthcare professionals need to be equipped for this Tower of Babel with, at the very least, the languages needed to make informed decisions based on new evidence.

Dr Ari Ercole, Fellow in Clinical Medicine

References

1. Wachter RM. Making IT Work: Harnessing the Power of Health Information Technology to Improve Care in England. Department of Health and Social Care: Report of the National Advisory Group on Health Information Technology in England; 2016.

2. Desautels T, Das R, Calvert J, Trivedi M, Summers C, Wales DJ, et al. Prediction of early unplanned intensive care unit readmission in a UK tertiary care hospital: a cross-sectional machine learning approach. BMJ Open. 2017;7(9):e017199.

3. Harris S, Shi S, Brealey D, MacCallum NS, Denaxas S, Perez-Suarez D, et al. Critical Care Health Informatics Collaborative (CCHIC): Data, tools and methods for reproducible research: A multi-centre UK intensive care database. Int J Med Inform. 2018;112:82–89.

4. Shah H. The DeepMind debacle demands dialogue on data. Nature. 2017;547(7663):259.